Enhance AI with Your Content: A Step-by-Step Guide

Capture Wallet, powered by Numbers Protocol, plays a crucial role in building ethical AI on user-owned and user-controlled datasets. By combining AssetTree (metadata) and Nid (decentralized content storage), the digital content (image, video, and music files) in a Capture Wallet forms a robust training dataset for AI. This setup allows AI models to access user content securely, with explicit wallet permission and AI training consent.

By adhering to ERC-7517, Capture uses the `miningPreference` field in the AssetTree to select only content whose owner permits AI training, further protecting creators' rights and ensuring ethical AI practices.

This page demonstrates how you can use Capture, Hugging Face, and Colab to train your own models on your registered assets and generate new AI inference images. By following these steps, you can leverage our service to enhance your creative capabilities and produce high-quality images. Because content is filtered according to ERC-7517, only assets whose owners permit AI training are used.
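To make the consent check concrete, here is a minimal in-memory sketch of the selection rule. It assumes each asset's AssetTree has already been fetched into a Python dictionary, and it reuses the `miningPreference` key, the `aiTrainingWithAuthorship` value, and the `aiTrainingtag` key that the Colab code in this guide is configured with. The `Asset` class, the `training_urls` function, the sample Nids, and the `notAllowed` value are hypothetical, for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    """Hypothetical pairing of an asset's Nid with its already-fetched AssetTree."""
    nid: str
    asset_tree: dict


def training_urls(assets, gateway, tag_key, tag_value,
                  preference_key="miningPreference",
                  allowed_preference="aiTrainingWithAuthorship"):
    """Return IPFS gateway URLs of assets that consent to AI training and carry the tag."""
    urls = []
    for asset in assets:
        tree = asset.asset_tree
        # ERC-7517-style consent check: skip assets whose owner did not opt in.
        if tree.get(preference_key) != allowed_preference:
            continue
        # Keep only assets labelled with the concept being trained.
        if tree.get(tag_key) != tag_value:
            continue
        urls.append(f"https://{gateway}/ipfs/{asset.nid}")
    return urls


# Hypothetical sample data ("notAllowed" is an illustrative value): only nid-a passes both checks.
assets = [
    Asset("nid-a", {"miningPreference": "aiTrainingWithAuthorship", "aiTrainingtag": "Elephant"}),
    Asset("nid-b", {"miningPreference": "notAllowed", "aiTrainingtag": "Elephant"}),
    Asset("nid-c", {"miningPreference": "aiTrainingWithAuthorship", "aiTrainingtag": "Lion"}),
]
print(training_urls(assets, "ipfs-pin.numbersprotocol.io", "aiTrainingtag", "Elephant"))
# ['https://ipfs-pin.numbersprotocol.io/ipfs/nid-a']
```

Because the check compares against a single accepted value, an asset with a missing or unrecognized preference is excluded, which errs on the side of the creator.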

Access Colab with Hugging Face Diffusers

- Click on the provided Colab link to open it in your web browser.

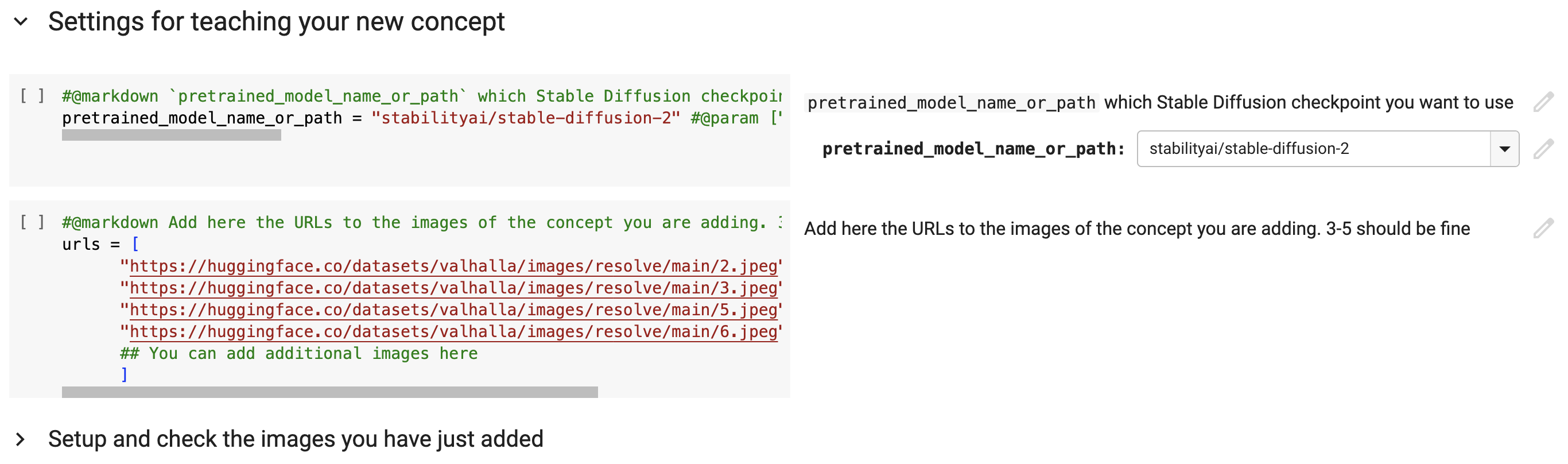

- Navigate to `urls` in the "Settings for teaching your new concept" section.

Before

Access Data From Capture Wallet

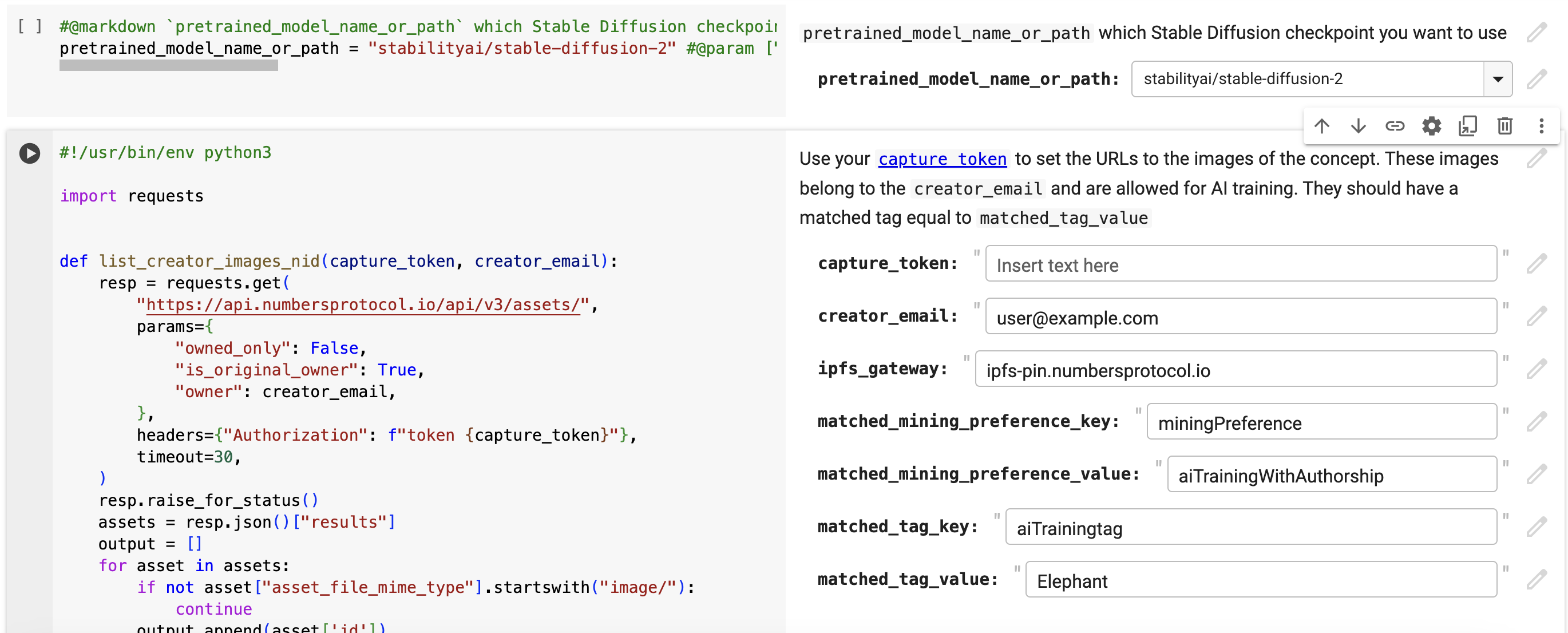

- Replace the entire code block labeled `urls` with the provided code. The code lists IPFS gateway links to the creator's images that match the configured mining preference and AI training tag.

```python
import requests


def list_creator_images_nid(capture_token, creator_email):
    """Return the Nids of image assets originally registered by `creator_email`."""
    resp = requests.get(
        "https://api.numbersprotocol.io/api/v3/assets/",
        params={
            "owned_only": False,
            "is_original_owner": True,
            "owner": creator_email,
        },
        headers={"Authorization": f"token {capture_token}"},
        timeout=30,
    )
    resp.raise_for_status()
    # Only the "results" of this single response are read.
    assets = resp.json()["results"]

    output = []
    for asset in assets:
        # Skip non-image assets such as video and music files.
        if not asset["asset_file_mime_type"].startswith("image/"):
            continue
        output.append(asset["id"])
    return output


def filter_matched_urls(
    nids,
    capture_token,
    ipfs_gateway,
    matched_mining_preference_key,
    matched_mining_preference_value,
    matched_tag_key,
    matched_tag_value,
):
    """Return IPFS gateway URLs of assets whose AssetTree matches both filters."""
    output = []
    for nid in nids:
        # Fetch the commit history recorded for this asset.
        resp = requests.get(
            "https://e23hi68y55.execute-api.us-east-1.amazonaws.com/default/get-commits-storage-backend-jade-near",
            params={"nid": nid},
            headers={"Authorization": f"token {capture_token}"},
            timeout=30,
        )
        resp.raise_for_status()
        commits = resp.json()["commits"]
        if not commits:
            continue

        # Resolve the commits into the asset's full AssetTree.
        payload = [
            {"assetTreeCid": commit["assetTreeCid"], "timestampCreated": commit["timestampCreated"]}
            for commit in commits
        ]
        resp = requests.post(
            "https://us-central1-numbers-protocol-api.cloudfunctions.net/get-full-asset-tree",
            json=payload,
            headers={"Authorization": f"token {capture_token}"},
            timeout=30,
        )
        resp.raise_for_status()
        asset_tree = resp.json()["assetTree"]

        # ERC-7517: keep only assets whose mining preference permits AI training.
        if asset_tree.get(matched_mining_preference_key) != matched_mining_preference_value:
            continue
        # Keep only assets tagged with the concept being trained.
        if asset_tree.get(matched_tag_key) != matched_tag_value:
            continue
        output.append(f"https://{ipfs_gateway}/ipfs/{nid}")
    return output


# @markdown Use your [`capture_token`](https://docs.captureapp.xyz/capture-account-and-wallet/) to set the URLs
# @markdown to the images of the concept. These images belong to the `creator_email` and are allowed for AI training.
# @markdown They should have a matched tag equal to `matched_tag_value`.
capture_token = ""  # @param {type:"string"}
creator_email = "[email protected]"  # @param {type:"string"}
ipfs_gateway = "ipfs-pin.numbersprotocol.io"  # @param {type:"string"}
matched_mining_preference_key = "miningPreference"  # @param {type:"string"}
matched_mining_preference_value = "aiTrainingWithAuthorship"  # @param {type:"string"}
matched_tag_key = "aiTrainingtag"  # @param {type:"string"}
matched_tag_value = "Elephant"  # @param {type:"string"}

urls = filter_matched_urls(
    list_creator_images_nid(capture_token, creator_email),
    capture_token,
    ipfs_gateway,
    matched_mining_preference_key,
    matched_mining_preference_value,
    matched_tag_key,
    matched_tag_value,
)
```

After

- Make sure to complete the fields: fill in `capture_token` with your Capture token, `creator_email` with your Capture email, and `matched_tag_value` with the tag assigned to the assets that will be used in this training.
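`list_creator_images_nid` reads only the `results` of a single response from the assets endpoint. If that endpoint paginates (the `results` key suggests a Django REST Framework-style page that also carries a `next` link, but this is an assumption, not something this guide confirms), a creator with many assets would be listed only partially. Below is a minimal sketch of following `next` links, with the HTTP call injected as a function so the loop can be exercised without network access; `iter_all_results`, `fetch_page`, and the page shape are hypothetical.

```python
from typing import Callable, Iterator, Optional

# Assumed page shape (not confirmed by this guide):
#   {"results": [...], "next": "<url of next page>" or None}
Page = dict


def iter_all_results(fetch_page: Callable[[Optional[str]], Page]) -> Iterator[dict]:
    """Yield items from every page by following `next` links.

    fetch_page(None) must return the first page; fetch_page(url) returns the page at url.
    """
    url: Optional[str] = None
    while True:
        page = fetch_page(url)
        yield from page.get("results", [])
        url = page.get("next")
        if not url:
            return


# Offline demo: two fake pages stand in for HTTP responses.
fake_pages = {
    None: {"results": [{"id": "nid-1"}, {"id": "nid-2"}], "next": "page-2"},
    "page-2": {"results": [{"id": "nid-3"}], "next": None},
}
print([item["id"] for item in iter_all_results(fake_pages.__getitem__)])
# ['nid-1', 'nid-2', 'nid-3']
```

In the Colab, a `fetch_page` along these lines would call `requests.get` on the assets URL with the original `params` for the first page and on the `next` URL afterwards, returning `resp.json()`; the image filter would then loop over `iter_all_results(fetch_page)` instead of a single `results` list.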

Configure Training Parameters¶

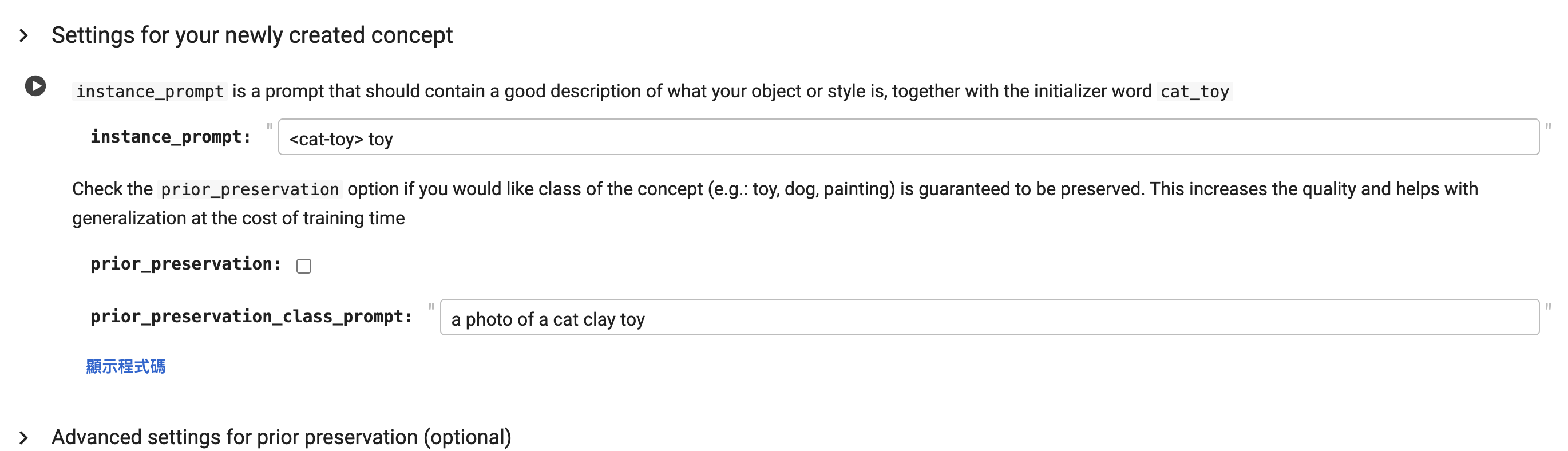

- Configure the

instance_promptin theSettings for your newly created conceptsubsection.

- Navigate to the

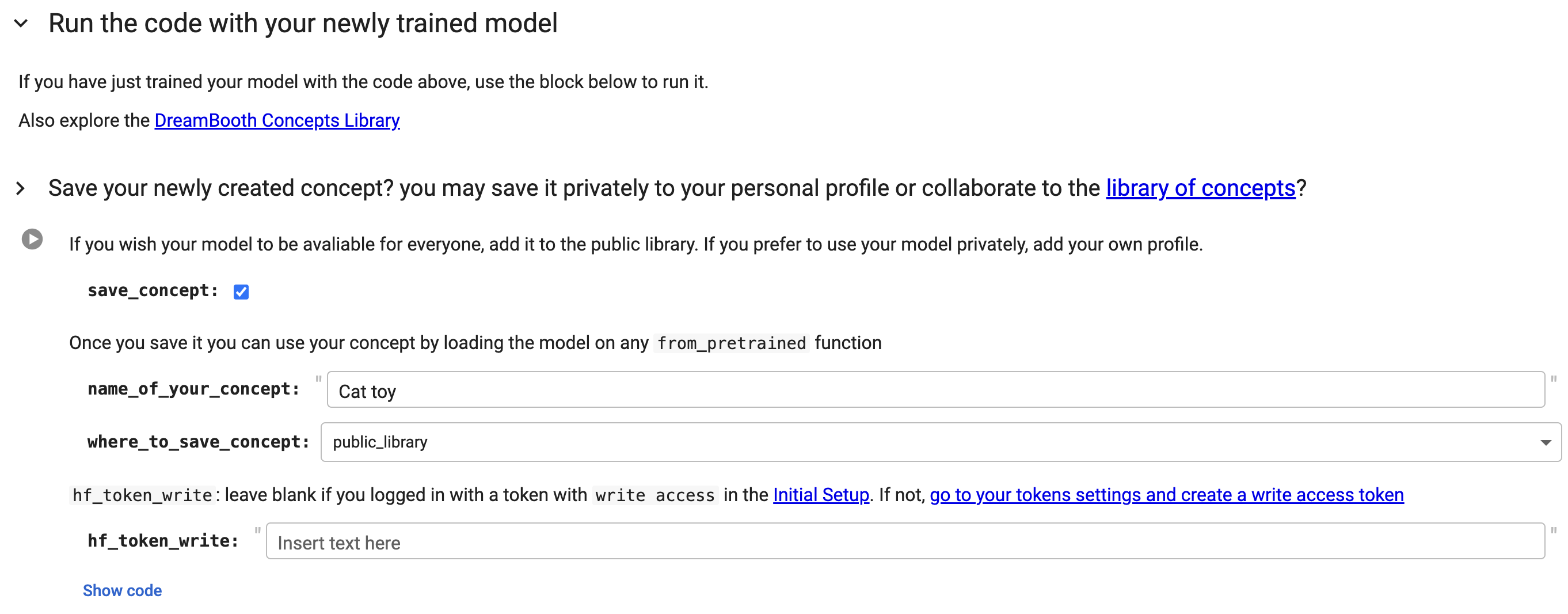

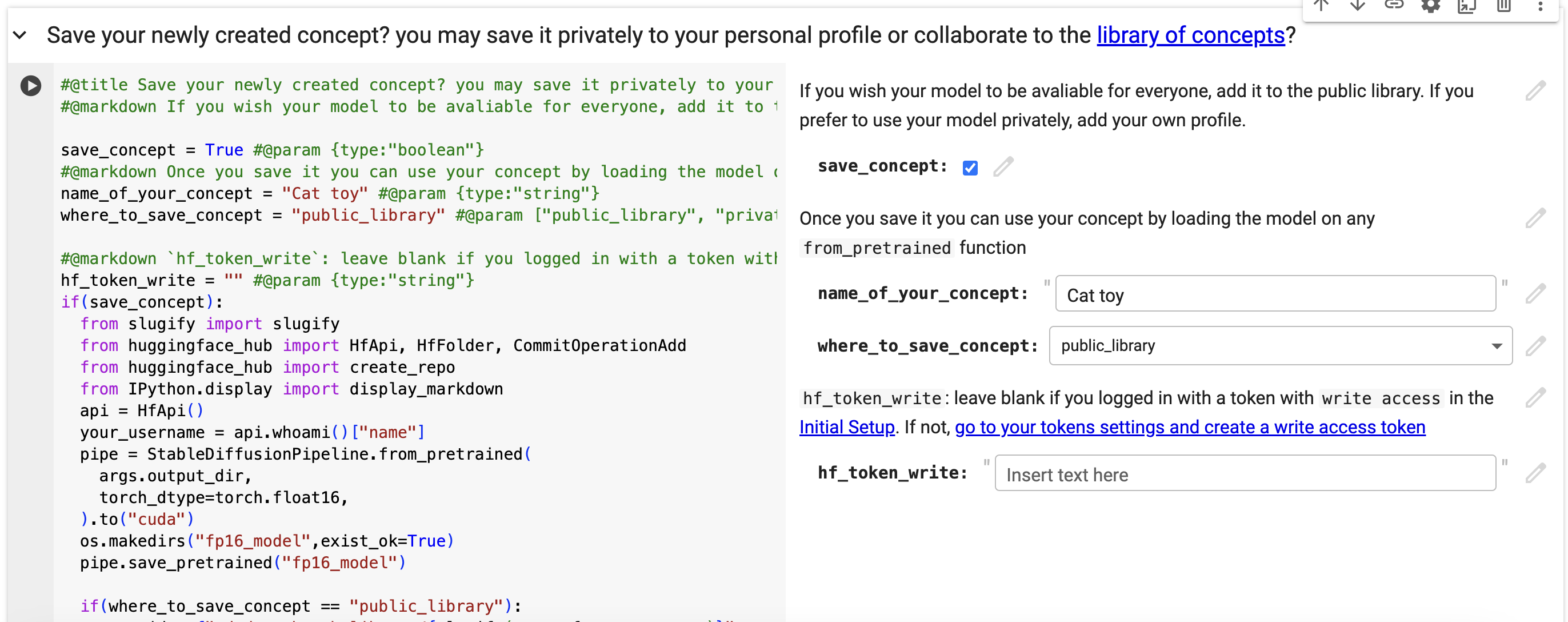

Run the code with your newly trained modelsection. Ensure to tick thesave_conceptoption, specifyname_of_your_concept, choose where_to_save_concept, and inputhf_token_writewith your Hugging Face token in theSave your newly created concept?subsection.

- Click "Show code" and, in the same section, replace `api = HfApi()` with `api = HfApi(token=hf_token_write)` so that the Hugging Face Hub calls authenticate with your write token.

Train Your Model

- Run each subsection in the "Initial setup", "Settings for teaching your new concept", and "Teach the model the new concept (fine-tuning with Dreambooth)" sections to train your model.

Improve training data (Optional)

- Dreambooth automatically center-crops images to a square. If your subject isn't centered and you want to refine the training data, do the following after completing the "Setup and check the images you have just added" subsection but before moving on to "Settings for your newly created concept":
  - Download the images from Colab to your local machine.
  - Remove the original images from Colab.
  - Manually crop the images as necessary and save them locally.
  - Once cropped, upload the newly saved images back to Colab.

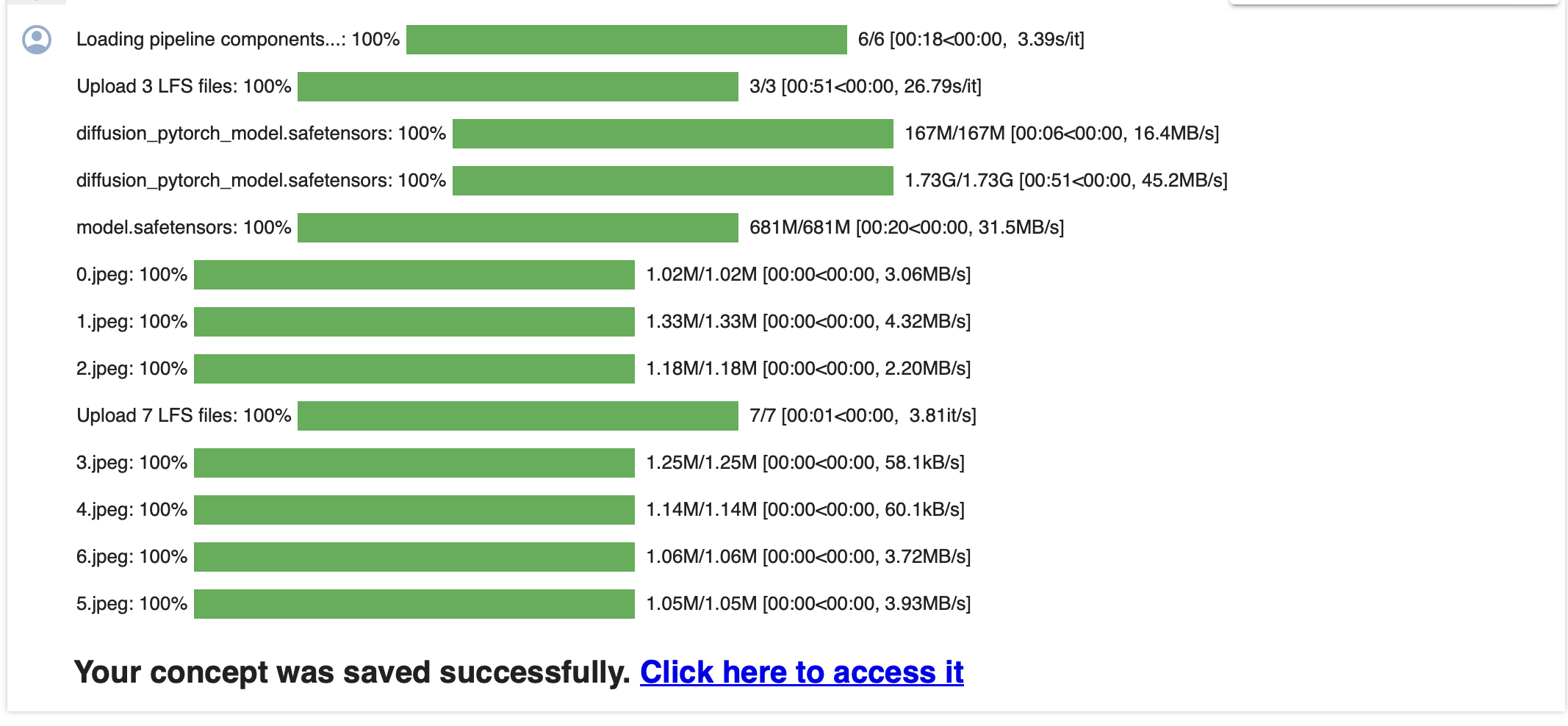
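To see why an off-center subject can be lost, the arithmetic below computes the square that a center crop keeps and, as an alternative, a square positioned around a chosen point and clamped to stay inside the image. This is a sketch assuming a plain largest-square center crop; any resizing the Colab applies afterwards is not modeled, and `center_square_box` and `square_box_around` are hypothetical helpers. Both return `(left, upper, right, lower)` tuples, the box format accepted by Pillow's `Image.crop`, so they could guide the manual cropping step.

```python
def center_square_box(width, height):
    """Largest centered square, as a (left, upper, right, lower) box."""
    side = min(width, height)
    left = (width - side) // 2
    upper = (height - side) // 2
    return (left, upper, left + side, upper + side)


def square_box_around(cx, cy, width, height, side=None):
    """Square box centered as close to the subject at (cx, cy) as the image allows."""
    if side is None:
        side = min(width, height)
    if not 0 < side <= min(width, height):
        raise ValueError("side must be positive and fit inside the image")
    # Center the square on the subject, then clamp it so it stays inside the image.
    left = min(max(cx - side // 2, 0), width - side)
    upper = min(max(cy - side // 2, 0), height - side)
    return (left, upper, left + side, upper + side)


# A 4000x3000 landscape photo whose subject sits near the left edge at (300, 1500).
print(center_square_box(4000, 3000))             # (500, 0, 3500, 3000): x=300 falls outside
print(square_box_around(300, 1500, 4000, 3000))  # (0, 0, 3000, 3000): subject kept
```

With Pillow installed, `Image.open(path).crop(square_box_around(...))` would produce the locally cropped image to upload back to Colab.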

Save Your Model

- Run the "Save your newly created concept?" subsection in the "Run the code with your newly trained model" section to save your trained model.

Generating AI Inference Images

- Click the "Click here to access it" link to open the Hugging Face model.

- Run the Text-to-Image task to generate a new image.
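DreamBooth fine-tuning binds the new concept to the phrase used as `instance_prompt`, so the Text-to-Image prompt generally needs to contain that phrase for the concept to appear. Each example in this guide follows that pattern, and the Lion example uses `sks`, a rare token commonly chosen as a DreamBooth identifier. The sketch below is a hypothetical pre-flight check (`uses_concept` is not part of Colab or Hugging Face) that flags prompts missing the phrase.

```python
import re


def uses_concept(prompt: str, instance_prompt: str) -> bool:
    """Return True if `prompt` contains `instance_prompt` as a whole phrase, ignoring case."""
    # Normalize runs of whitespace so "sks  toy" matches "sks toy".
    phrase = " ".join(instance_prompt.split())
    text = " ".join(prompt.split())
    # \b anchors stop "sks toy" from matching inside e.g. "tasks toy".
    pattern = r"\b" + re.escape(phrase) + r"\b"
    return re.search(pattern, text, flags=re.IGNORECASE) is not None


# The first two pairs come from this guide's examples; the last prompt is hypothetical.
print(uses_concept("oil painting of elephant toy in style of van gogh", "elephant toy"))  # True
print(uses_concept("A cat doll resembles a sks toy", "sks toy"))                           # True
print(uses_concept("A lion toy on a beach", "sks toy"))                                    # False
```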

Examples

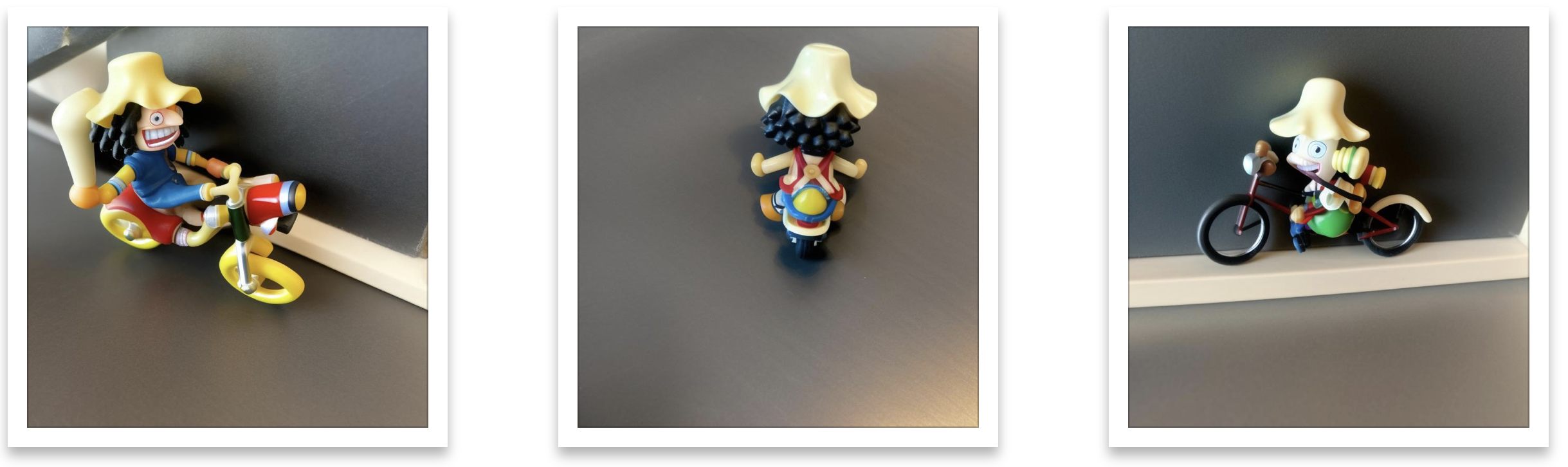

- Usopp toy
  - `creator_email`: [email protected]
  - `matched_tag_value`: Usopp
  - `instance_prompt`: Usopp toy
  - Text-to-Image: The Usopp toy is riding a bicycle

Concept Images

Inference Images

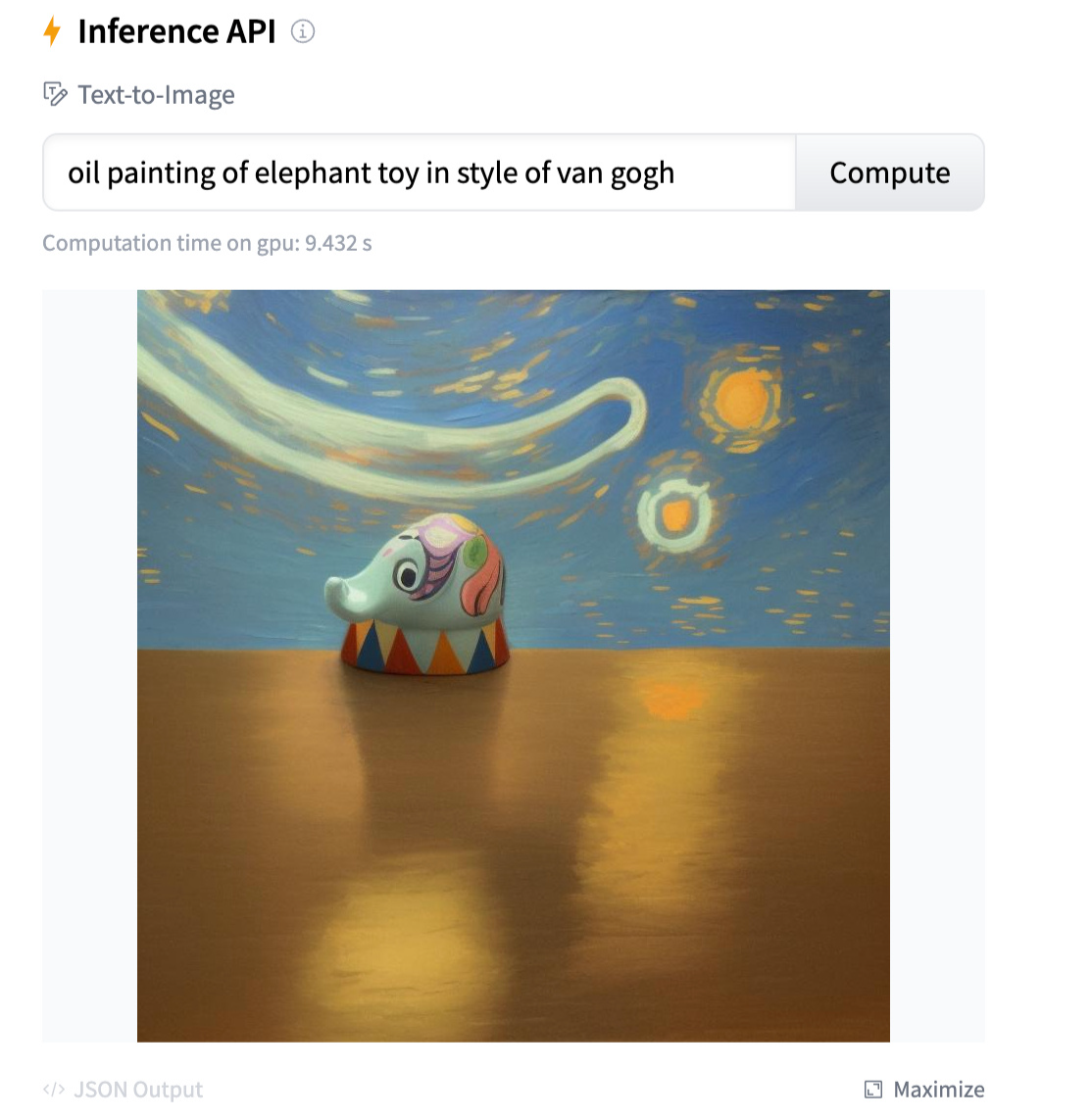

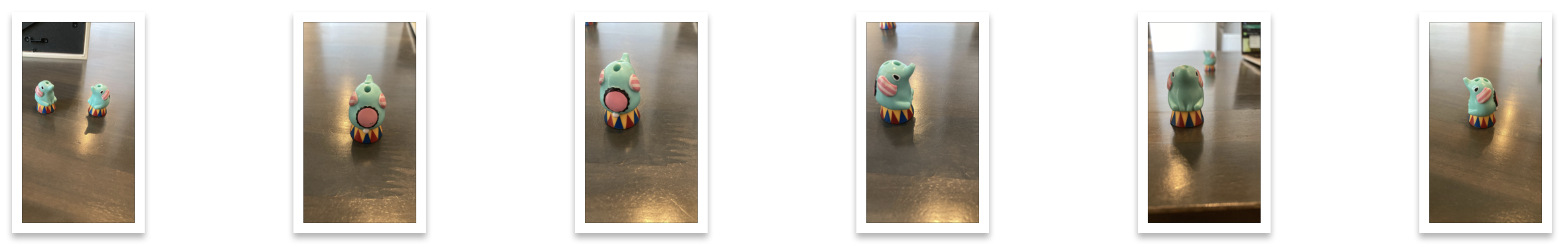

- Elephant toy
  - `creator_email`: [email protected]
  - `matched_tag_value`: Elephant
  - `instance_prompt`: elephant toy
  - Text-to-Image: oil painting of elephant toy in style of van gogh

Concept Images

Inference Images

- Lion toy
  - `creator_email`: [email protected]
  - `matched_tag_value`: Lion
  - `instance_prompt`: sks toy
  - Text-to-Image: A cat doll resembles a sks toy

Concept Images

Inference Images

- Usopp toy (with improved training data)
  - `creator_email`: [email protected]
  - `matched_tag_value`: Usopp
  - `instance_prompt`: Usopp toy
  - Text-to-Image: The Usopp toy is riding a bicycle

Concept Images

Inference Images